Data Center vs AI Data Center: Understanding the New Infrastructure Divide

Short, high-impact differences separate traditional data centers from modern AI data centers. This article explains those differences, why they matter, and how organizations can prepare for the accelerating shift toward AI-optimized digital infrastructure.

Table of Contents

- Overview: Why AI Is Forcing a New Data Center Model

- Core Architectural Differences

- Operational & Management Differences

- Economic & Environmental Implications

- The Future of AI Data Centers

- Top 5 Frequently Asked Questions

- Final Thoughts

- Resources

Overview: Why AI Is Forcing a New Data Center Model

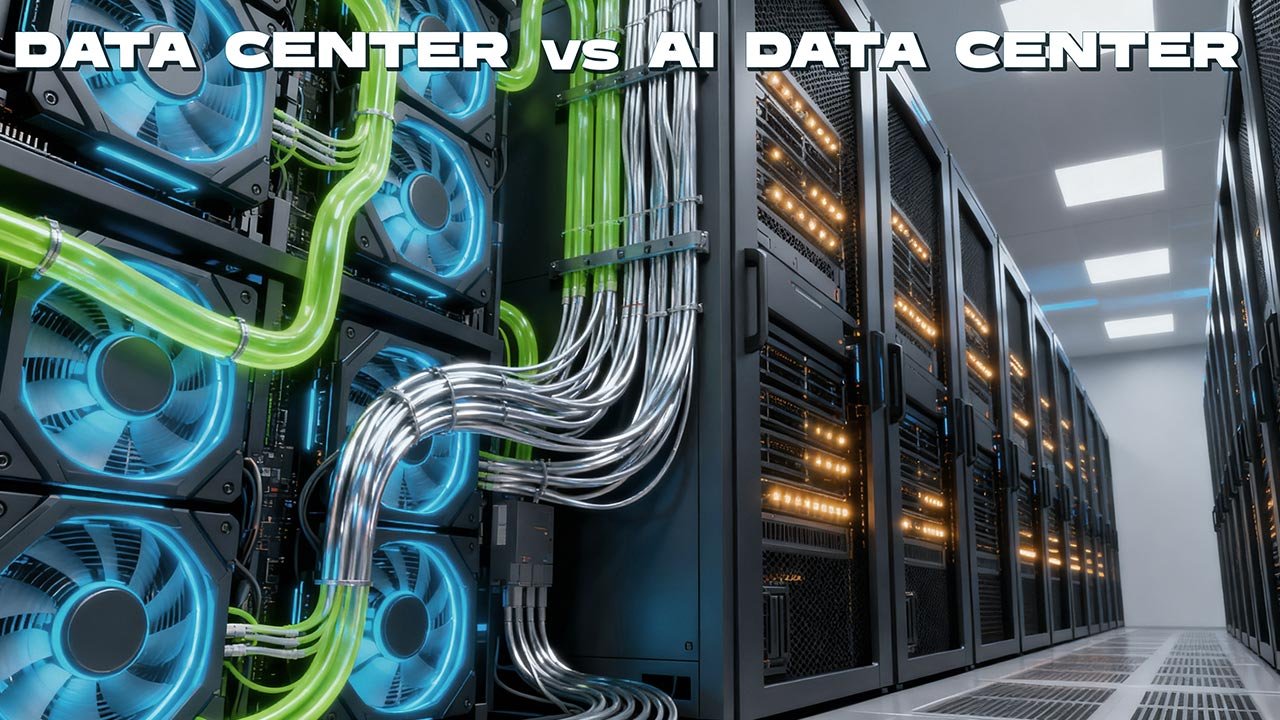

Traditional data centers were designed for web hosting, enterprise applications, and storage workloads. These tasks demand predictable compute cycles and moderate power densities. AI data centers, by contrast, must sustain massively parallel computation, extremely high power draw, and terabit-scale data flows. Training large language models can require thousands of GPUs operating in dense clusters, something legacy data centers were never engineered to handle. This divergence has created a new category of digital infrastructure: AI-optimized data centers with specialized networking, cooling, and power architectures.

Core Architectural Differences

Compute Infrastructure

Traditional data centers rely on CPU-centric racks optimized for virtualization, multi-tenant workloads, and general-purpose computing. Their density typically ranges from 5–15 kW per rack. AI data centers flip this model. GPU clusters now dominate compute stacks, with accelerators such as NVIDIA H100, AMD MI300, and custom ASICs pushing racks beyond 30–100 kW densities. Parallelization is the core design principle: instead of many independent workloads, GPUs collaborate in synchronized compute fabrics. As a result, AI data centers integrate high-bandwidth interconnects (NVLink, InfiniBand) and server architectures that behave like one massive accelerated computer rather than thousands of independent servers.

Networking Models

Legacy data centers employ Ethernet-based hierarchical networks—typically three-tier architectures (core, aggregation, access). These support standard enterprise traffic but cannot meet AI cluster latency or throughput demands. AI workloads require ultra-low latency and extremely high throughput. AI data centers often deploy fat-tree or Dragonfly topologies with InfiniBand or next-gen Ethernet, providing hundreds of gigabits per second per GPU node. The network becomes a critical performance factor. Slow networking can bottleneck training time, increasing costs by millions. AI data centers therefore rely on software-defined fabrics that dynamically tune traffic, congestion, and routing patterns to maintain cluster-wide efficiency.

Cooling & Power Demands

Cooling is one of the most visible splits between the two models. Traditional data centers operate with air cooling and moderate heat loads. AI data centers often exceed thermal limits for air cooling, pushing operators toward: – Direct-to-chip liquid cooling – Rear-door heat exchangers – Immersion cooling tanks for extreme GPU clusters Power draw is equally divergent. Conventional racks draw 10–15 kW; AI racks frequently surpass 80 kW. Some hyperscalers now design AI modules at 120–150 kW. Power distribution units, transformers, and backup systems must be completely re-engineered to support sustained high-load operation.

Storage Requirements

Traditional workloads prioritize reliability, redundancy, and predictable performance. Storage systems are typically optimized around block and file storage. AI training demands enormous sequential read/write throughput and fast access to petabyte-scale datasets. To support these patterns, AI data centers increasingly adopt NVMe-over-Fabrics (NVMe-oF), high-performance object storage, and tiered data pipelines with SSD caching layers. As models grow, storage systems must integrate tightly with the training cluster to minimize I/O bottlenecks that can stall GPU usage.

Operational & Management Differences

Operations in a traditional data center revolve around uptime, virtualization efficiency, and resource allocation. AI data center operations introduce new challenges: – Cluster orchestration to keep thousands of GPUs synchronized – Data pipeline management for training/validation datasets – Automated workload scheduling to maximize GPU utilization – Real-time monitoring of power, cooling, and network latency at microsecond precision AI infrastructure resembles a factory floor where scheduling, heat management, and throughput optimization directly impact cost and competitiveness. Because AI workloads are continuous and compute-intensive, idle GPUs translate to immediate financial loss. This has driven operators to adopt advanced AIOps, telemetry systems, and digital twins for predictive optimization.

Economic & Environmental Implications

The shift to AI data centers is reshaping global energy and CapEx budgets. Traditional data center economics assume incremental scaling and moderate hardware refresh cycles. AI, however, accelerates both capital investment and energy consumption. Key trends include: – AI clusters cost 3–10× more per rack compared to CPU-based systems – Energy consumption is rising sharply, with some hyperscalers projecting AI workloads to represent 20–30% of total power usage by 2030 – Liquid cooling reduces operational overhead by improving efficiency, but increases initial installation cost Environmental impacts are significant. AI data centers must integrate renewable energy sources, power-purchase agreements, micro-grids, and advanced heat-reuse systems to meet sustainability standards and government regulations.

The Future of AI Data Centers

The evolution from traditional to AI-optimized data centers is accelerating. Several trends will define the next decade: – Specialized AI accelerators, including domain-specific chips for LLMs, inference, and edge-AI – Fully liquid-cooled facilities where air cooling is secondary or eliminated – Autonomous data center operations directed by reinforcement learning and digital twins – Expansion of AI workloads into edge micro data centers to support robotics, autonomous systems, and real-time analytics – Green AI infrastructure combining renewable energy and heat recycling Over time, AI data centers will not replace traditional ones but operate alongside them—each serving a distinct category of workloads.

Top 5 Frequently Asked Questions

Final Thoughts

AI is redefining what a data center needs to be. Traditional facilities optimized for general computing cannot meet the extreme requirements of modern AI workloads. AI data centers introduce new architectural principles—massive parallel compute fabrics, liquid cooling, ultra-dense racks, and low-latency networks. The most important takeaway: the future of digital infrastructure will be hybrid. Organizations must plan for two distinct environments—one for enterprise workloads and one for AI acceleration. Those that adapt early will gain a competitive advantage in performance, cost efficiency, and innovation velocity.

Resources

- NVIDIA Data Center Architecture Guides

- Uptime Institute Reports on High-Density Cooling

- McKinsey: The Economics of AI Infrastructure (public research articles)

- AMD Instinct MI300 Technical Briefs

Leave A Comment